Fully connected ANN with a hidden layer

The Artificial Neuron

Neuron's Definition Functions

Activation Functions

Time Delay Neural Network (TDNN)

TDNN Step 1

TDNN Step 2

TDNN Step 3

TDNN Step 101

Cross-validation technique

Representation of prediction method using ANNs

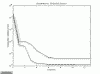

Training graph

Prediction with ANN - Control

Prediction with ANN - OCD

The figure shows the neuron's inputs (p), the weights (w), the adder (Σ), the activation function (f) and the output (a).

Functions that describe neuron k. For the meaning of each symbol, see the previous image.
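A minimal Python sketch of the neuron described above. It assumes the usual formulation a = f(Σ_j w_j p_j + b). The bias b is an assumption because it is not shown in the caption, so it defaults to 0. `neuron_output` is a hypothetical helper name.

```python
import numpy as np

def neuron_output(p, w, b=0.0, f=np.tanh):
    """Output a = f(n) of a single artificial neuron.

    p: input vector, w: weight vector of the same length,
    b: bias (an assumption, defaults to 0), f: activation function.
    """
    p = np.asarray(p, dtype=float)
    w = np.asarray(w, dtype=float)
    n = float(np.dot(w, p)) + b  # adder (Σ): weighted sum of the inputs plus bias
    return f(n)                  # activation function (f) produces the output a

# Linear activation: n = 0.5*1 + (-1)*2 + 0.25*4 + 0.5 = 0.0
a = neuron_output([1.0, 2.0, 4.0], [0.5, -1.0, 0.25], b=0.5, f=lambda n: n)
print(a)
```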

A: Linear

B: Hard Limit

Γ: Log-Sigmoid

Δ: Hyperbolic Tangent Sigmoid
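The four activation functions can be sketched in Python as follows. The formulas follow the common textbook definitions, which are also used by MATLAB's `purelin`, `hardlim`, `logsig` and `tansig`. The Python function names are illustrative only.

```python
import numpy as np

def linear(n):
    # A: Linear, a = n
    return np.asarray(n, dtype=float)

def hard_limit(n):
    # B: Hard Limit, a = 1 if n >= 0, otherwise 0
    return np.where(np.asarray(n) >= 0, 1.0, 0.0)

def log_sigmoid(n):
    # Γ: Log-Sigmoid, a = 1 / (1 + e^(-n)), output in (0, 1)
    return 1.0 / (1.0 + np.exp(-np.asarray(n, dtype=float)))

def tanh_sigmoid(n):
    # Δ: Hyperbolic Tangent Sigmoid, a = 2 / (1 + e^(-2n)) - 1 = tanh(n), output in (-1, 1)
    return np.tanh(np.asarray(n, dtype=float))

n = np.array([-2.0, 0.0, 2.0])
for name, f in [("linear", linear), ("hard limit", hard_limit),
                ("log-sigmoid", log_sigmoid), ("tanh-sigmoid", tanh_sigmoid)]:
    print(name, np.round(f(n), 3))
```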

Elementary TDNN with m inputs and k delays for each input.
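The tapped delay line of an elementary TDNN can be sketched as a function that converts m input signals into network input vectors. This sketch assumes that each input contributes its current sample plus k delayed samples, giving k + 1 taps per input. The figure may count taps differently. `tapped_delay_inputs` is a hypothetical name.

```python
import numpy as np

def tapped_delay_inputs(series, k):
    """Build TDNN input vectors from m time series with k delays each.

    series: array of shape (T, m), one column per input signal.
    Returns an array of shape (T - k, m * (k + 1)). The row for time t holds
    x_i(t), x_i(t-1), ..., x_i(t-k) for every input i = 1..m.
    """
    series = np.asarray(series, dtype=float)
    if series.ndim == 1:
        series = series[:, None]  # a single signal becomes one column (m = 1)
    T, m = series.shape
    rows = []
    for t in range(k, T):
        # for each input: the current sample followed by its k delayed samples
        taps = [series[t - d, i] for i in range(m) for d in range(k + 1)]
        rows.append(taps)
    return np.array(rows)

x = np.arange(6.0)  # hypothetical signal 0, 1, ..., 5
print(tapped_delay_inputs(x, k=2))
```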

An example of a Time Delay Neural Network used for time-series prediction.

When appropriately trained, the network can predict future values of a time series.

After each prediction, the inputs are shifted and the prediction is fed back into the network as an input.

See Steps 2, 3 & 101 for more details.

An example of a Time Delay Neural Network used for time-series prediction.

When appropriately trained, the network can predict future values of a time series.

After each prediction, the inputs are shifted and the prediction is fed back into the network as an input.

See Steps 1, 3 & 101 for more details.

An example of a Time Delay Neural Network used for time-series prediction.

When appropriately trained, the network can predict future values of a time series.

After each prediction, the inputs are shifted and the prediction is fed back into the network as an input.

See Steps 1, 2 & 101 for more details.

An example of a Time Delay Neural Network used for time-series prediction.

When appropriately trained, the network can predict future values of a time series.

After each prediction, the inputs are shifted and the prediction is fed back into the network as an input.

See Steps 1, 2 & 3 for more details.
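The shift-and-feed-back procedure of the steps above can be sketched as a loop. In this sketch, the trained network is replaced by a hypothetical one-step predictor `predict`, which here is a simple linear extrapolation rather than a trained ANN. `window_size` stands for the number of past values the network sees.

```python
from collections import deque

def recursive_forecast(predict, history, window_size, steps):
    """Predict `steps` future values, feeding each prediction back as an input.

    predict: a one-step predictor (hypothetical) that maps the window_size most
             recent values, oldest first, to the next value.
    history: observed values; only the last window_size are used to start.
    """
    window = deque(history[-window_size:], maxlen=window_size)  # current inputs
    forecasts = []
    for _ in range(steps):
        y = predict(list(window))  # one-step-ahead prediction
        forecasts.append(y)
        window.append(y)           # shift: the oldest input drops out, the prediction enters
    return forecasts

# Stand-in "network": linear extrapolation from the last two inputs (not a trained ANN).
extrapolate = lambda w: 2 * w[-1] - w[-2]
print(recursive_forecast(extrapolate, [1.0, 2.0, 3.0], window_size=2, steps=4))
```

Because every prediction becomes an input for the next one, errors can accumulate over many steps. This makes late steps, such as Step 101, the hardest test of the network.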

This technique is widely used in almost all fields of science. It is employed...

...whenever the data set is relatively small

...as a means of validating results
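A minimal sketch of how cross-validation splits the data, assuming k-fold splitting by index. Every sample is used for validation exactly once. When there are as many folds as samples, the procedure becomes leave-one-out and gives one split per subject. `k_fold_splits` is a hypothetical helper.

```python
def k_fold_splits(n_samples, n_folds):
    """Yield (train_indices, validation_indices) for k-fold cross-validation.

    Every sample lands in exactly one validation fold; with
    n_folds == n_samples this is leave-one-out cross-validation.
    """
    indices = list(range(n_samples))
    # spread any remainder over the first folds so fold sizes differ by at most one
    fold_sizes = [n_samples // n_folds + (1 if f < n_samples % n_folds else 0)
                  for f in range(n_folds)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size

for train, val in k_fold_splits(5, 5):  # leave-one-out on 5 hypothetical subjects
    print("train:", train, "validate:", val)
```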

1st column:

Building appropriate matrices for each data group.

2nd column:

Building and training networks with the cross-validation technique.

e.g. for 30 subjects there would be 30 different networks.

3rd column:

Part of each subject's signal is fed to the corresponding network of each group.

The subject is classified into the group whose network yields the smallest Mean Square Error.
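The classification rule of the 3rd column can be sketched as follows. Each group's network predicts the same part of the subject's signal, and the subject is assigned to the group with the smallest Mean Square Error. The group names and numbers below are hypothetical and are not results of this study.

```python
import numpy as np

def mse(actual, predicted):
    """Mean Square Error between an actual and a predicted signal."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean((actual - predicted) ** 2))

def classify_by_mse(actual, predictions_by_group):
    """Assign a subject to the group whose network predicts its signal best.

    predictions_by_group: dict mapping a group name (e.g. "Control", "OCD")
    to that group's network prediction of the same signal segment.
    Returns the winning group and the MSE for each group.
    """
    errors = {g: mse(actual, p) for g, p in predictions_by_group.items()}
    return min(errors, key=errors.get), errors

# Hypothetical numbers, not results from this study.
actual = [0.0, 1.0, 0.5, -0.5]
preds = {"Control": [0.5, 0.2, 0.9, 0.3], "OCD": [0.1, 0.9, 0.4, -0.4]}
group, errors = classify_by_mse(actual, preds)
print(group, errors)
```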

Graphical representation of training.

The blue line shows the network's output error on the training data as a function of the number of training epochs.

The green line shows the network's output error on the validation data (data used to avoid overtraining the network on a specific data set).
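One common way to use validation data against overtraining is early stopping. The slides do not say which rule was used, so early stopping is an assumption here. The sketch trains a single linear neuron by gradient descent on synthetic data; the neuron is a stand-in for the real network. It records both error curves at every epoch and stops after `patience` epochs without improvement in validation error. At that point, it keeps the weights with the lowest validation error.

```python
import numpy as np

def train_with_early_stopping(X_tr, y_tr, X_val, y_val, lr=0.01,
                              max_epochs=1000, patience=10):
    """Gradient-descent training of a single linear neuron with early stopping.

    Records the training and validation MSE after every epoch (the two curves
    of a training graph) and stops once the validation error has not improved
    for `patience` consecutive epochs; the best-validation weights are returned.
    """
    w = np.zeros(X_tr.shape[1])
    b = 0.0
    best = (np.inf, w.copy(), b)
    train_curve, val_curve = [], []
    wait = 0
    for epoch in range(max_epochs):
        err = X_tr @ w + b - y_tr               # prediction error on the training data
        w -= lr * 2 * X_tr.T @ err / len(y_tr)  # gradient step on the training MSE
        b -= lr * 2 * err.mean()
        train_curve.append(float(np.mean((X_tr @ w + b - y_tr) ** 2)))
        val_curve.append(float(np.mean((X_val @ w + b - y_val) ** 2)))
        if val_curve[-1] < best[0]:
            best, wait = (val_curve[-1], w.copy(), b), 0
        else:
            wait += 1
            if wait >= patience:                # validation error stopped improving
                break
    _, w, b = best
    return w, b, train_curve, val_curve

rng = np.random.default_rng(0)
X = rng.normal(size=(60, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=60)
w, b, tr, va = train_with_early_stopping(X[:40], y[:40], X[40:], y[40:], lr=0.05)
print(len(tr), round(min(va), 4), np.round(w, 2))
```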

Predicting the ERP signal of an OCD subject using the Controls NN.

Ideally the Mean Square Error will be large, so that the subject is classified correctly.


Predicting the ERP signal of an OCD subject using the OCD NN.

Ideally the Mean Square Error will be smaller than the MSE obtained from the Controls NN, so that the subject is classified correctly.

